For a blind or visually impaired person, getting around a large building like a hospital or lab may involve a lot of guesswork and asking for directions. A new system called Navatar, created by engineers at the University of Nevada, Reno, uses the sensors in a smartphone to detect progress along a map of a building, allowing for natural navigation that's cheap to boot.

There are more sophisticated indoor-navigation systems, like the new Indoor Positioning System chips, but this one requires no special hardware beyond standard sensors like an accelerometer, and no beacons or cameras need to be installed in the building. And because it doesn't use wireless signals, it can be used in places like hospitals and museums that frown on such transmissions.

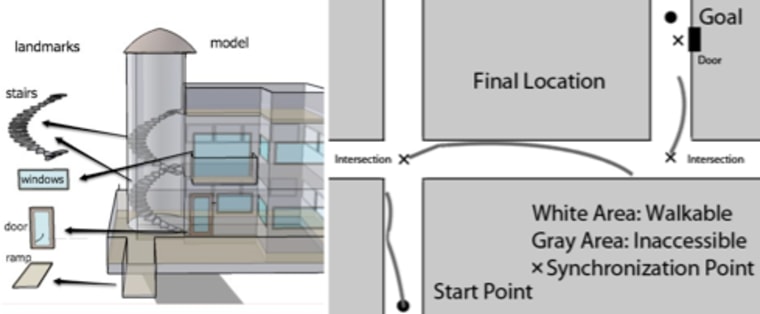

All it needs is a 3-D map of the building, which in this case was created using Google's free SketchUp program, though it could conceivably be created automatically from blueprints or other records. The user puts in their starting point and destination ("South entrance, going to room 243") and the system gives them turn-by-turn directions, all the while counting footsteps and detecting changes in orientation in order to get a rough idea of where the user is in the building.
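The footstep-and-orientation tracking described above is a form of dead reckoning. As a rough sketch of the idea, not Navatar's actual code, the Python below advances a position estimate from step counts and compass headings. The stride length, the coordinate convention and the names `Position`, `advance` and `track` are all assumptions made for illustration.

```python
import math
from dataclasses import dataclass

# Assumed average stride in feet; a real system would calibrate this per user.
STEP_LENGTH_FT = 2.5


@dataclass
class Position:
    x: float  # feet east of the starting point
    y: float  # feet north of the starting point


def advance(pos, steps, heading_deg, step_length=STEP_LENGTH_FT):
    """Move the estimate `steps` strides along a compass heading.

    Heading convention (assumed): 0 degrees is north (+y), 90 is east (+x).
    """
    distance = steps * step_length
    rad = math.radians(heading_deg)
    return Position(pos.x + distance * math.sin(rad),
                    pos.y + distance * math.cos(rad))


def track(start, segments):
    """Apply a sequence of (steps, heading_deg) readings to a start position."""
    pos = start
    for steps, heading_deg in segments:
        pos = advance(pos, steps, heading_deg)
    return pos


# Four steps north, then a right turn and two steps east.
estimate = track(Position(0.0, 0.0), [(4, 0), (2, 90)])
```

Errors in stride length and heading accumulate with every step in a scheme like this, which is why the estimate is only a rough one.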

The creators of the program claim that using the sensors in the phone and the map data, they can locate the user to within six feet, which is more than accurate enough to get someone into the vicinity of their destination. And because it knows the layout of the building, it doesn't have to say "10 feet ahead," but rather says "turn right, then second door on the left," and relies on the person's ability to navigate the environment to fill in the gaps or update the location if necessary.
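One way to picture that location update is as a snap to a known landmark: when the user confirms reaching a door or intersection, the estimate is replaced by the landmark's mapped coordinates. The sketch below is a guess at the general idea rather than the project's implementation; the landmark names, their coordinates and the function names are hypothetical.

```python
import math

# Hypothetical landmark coordinates in feet, as they might be read off a building map.
LANDMARKS = {
    "hallway intersection": (0.0, 40.0),
    "room 243 door": (22.0, 40.0),
}


def error_ft(estimate, truth):
    """Straight-line distance in feet between an estimate and a mapped point."""
    return math.hypot(estimate[0] - truth[0], estimate[1] - truth[1])


def confirm_landmark(estimate, name, landmarks=LANDMARKS):
    """Snap the estimate to a confirmed landmark.

    Returns the corrected position and how far the estimate had drifted.
    """
    truth = landmarks[name]
    return truth, error_ft(estimate, truth)


# The sensors put the user at (3, 36); the user confirms the intersection.
corrected, drift = confirm_landmark((3.0, 36.0), "hallway intersection")
```

In this made-up case the estimate had drifted five feet, inside the six-foot figure the creators cite, and the confirmation resets the error before the next leg of the route.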

It wouldn't be just for the visually impaired, either. Eelke Folmer, who leads the project, told me that the technology is easily adaptable for other situations: a fireman navigating a smoky and unfamiliar building, for instance, or just a regular, sighted person trying to find their way around a big place like an airport without relying on battery-sucking wireless.

The bottleneck seems to be the mapping and annotating of environments. It's one thing to build a few models of buildings on campus, another to have thousands of buildings around the country mapped and accessible to the app. That said, it's a powerful approach in its independence from wireless infrastructure, and in how it uses both the senses of the user and the "expanded" senses of their device.

More information on the system, along with some papers describing the techniques used, can be found at the Navatar site.

Devin Coldewey is a contributing writer for msnbc.com. His personal website is coldewey.cc.