The New York Police Department abused its facial recognition system by editing suspects' photos and by uploading celebrity lookalikes in an effort to identify people wanted for crimes, researchers charged this week in a blistering report on law enforcement's use of surveillance technology.

The findings, culled from documents obtained in a two-year legal battle with the NYPD, were included in an investigation by the Georgetown Center on Privacy and Technology into police use of facial recognition across the country. The report was released Thursday amid calls by some cities, including San Francisco, to ban police use of the technology altogether, part of a growing resistance to a secretive technique that critics say could increase the risk of mistaken arrests.

"It doesn’t matter how accurate facial recognition algorithms are if police are putting very subjective, highly edited or just wrong information into their systems," said the report's author, Clare Garvie, a senior associate at the Center on Privacy and Technology who specializes in facial recognition. "They're not going to get good information out. They're not going to get valuable leads. There's a high risk of misidentification. And it violates due process if they're using it and not sharing it with defense attorneys."

Facial recognition algorithms compare images of unidentified people to mugshots, booking photos and driver's license photos. Police are adopting the technology for use in routine investigations, saying it helps them solve crimes that otherwise would go cold. They say they use it as an investigative tool and that a match alone is not grounds for arresting someone.

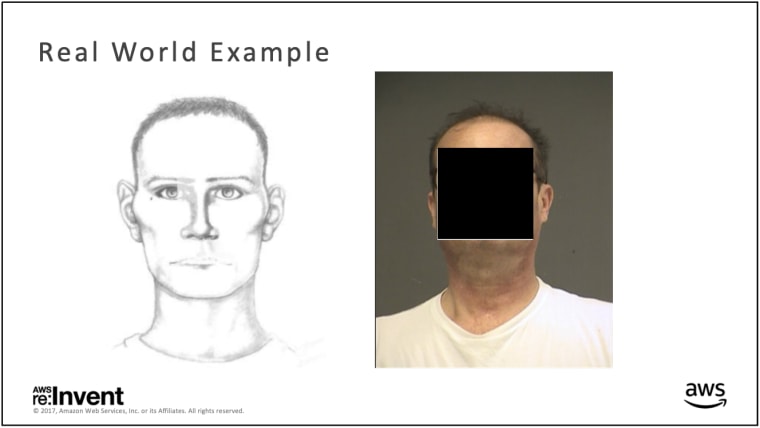

The Georgetown report did not focus exclusively on New York police. It also documented policies that it said seemed to allow officers to submit artists' sketches into facial recognition systems in Maricopa County, Arizona; Washington County, Oregon; and Pinellas County, Florida.

The Washington County Sheriff's Office "has actually never used a sketch with our facial recognition program for an actual case," a spokesman said in a statement. "A sketch has only been used for demonstration purposes, in a testing environment."

A spokesman for the Pinellas County Sheriff's Office responded similarly: "We do not use sketches with our facial recognition system," the spokesman said in a statement.

The Maricopa County Sheriff's Office no longer maintains a facial recognition system but has access to one operated by the state of Arizona, the agency said, adding that the information in the Georgetown report was outdated.

The NYPD figures most prominently in the report.

The department has fought Georgetown's efforts to obtain information about how its facial recognition system works, engaging in a lengthy court skirmish over what documents it can disclose. The department ultimately handed over thousands of pages of documents, but has since tried to get the researchers to return some of them, saying they were confidential and shared inadvertently. Those disputed documents were not used as a source for the report released Thursday, Garvie said.

An NYPD spokesperson said in a statement Thursday that the department "has been deliberate and responsible in its use of facial recognition technology" and has used it to solve a variety of crimes, from homicides and rapes to attacks in the city's subway system, as well as in investigations of missing or unidentified people. The spokesperson did not dispute anything in the Georgetown report, but said the department is reviewing its "facial recognition protocols."

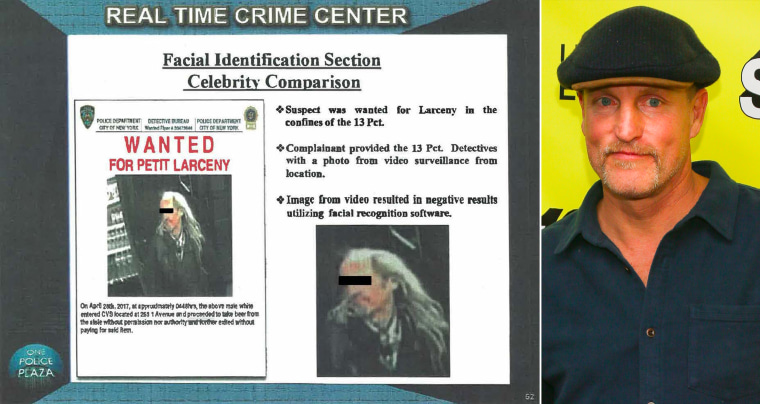

The Georgetown researchers said they found a case in which NYPD investigators were trying to identify a man caught on surveillance footage stealing beer from a CVS. Citing a detective's internal report on the case, the researchers said the surveillance image was of poor quality and did not produce any potential matches in the facial recognition system. But a detective noted that the suspect looked like actor Woody Harrelson, so a high-resolution image of Harrelson was submitted in the suspect's place. From the new list of results, detectives found one person who they believed was a match, leading to an arrest, according to the researchers.

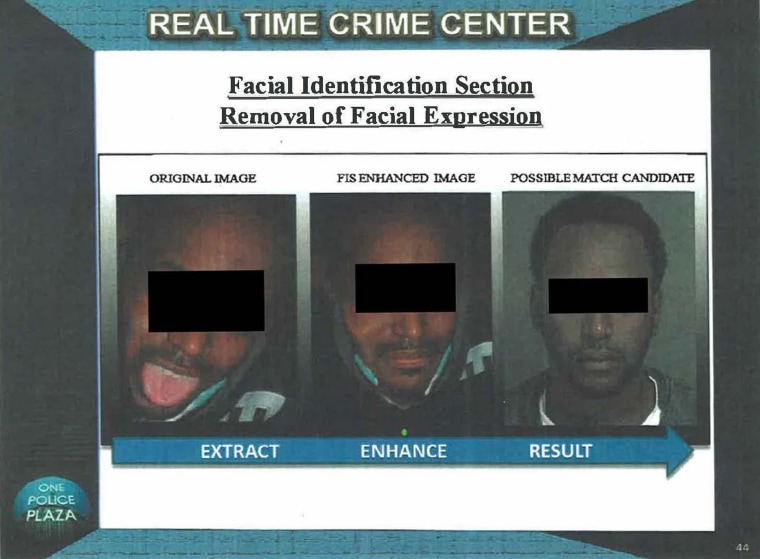

The researchers also found evidence that NYPD investigators doctored images of suspects to make them look more like mugshots, including replacing an open mouth with a closed mouth by taking photos of a model off Google and pasting that person's lips onto the suspect's image. The department also used 3D modeling software to fill out incomplete images or rotate faces so that they faced forward, the researchers said.

"These techniques amount to the fabrication of facial identity points: at best an attempt to create information that isn’t there in the first place and at worst introducing evidence that matches someone other than the person being searched for," the report says.

The report went on to detail other questionable methods at the NYPD, including failing to use traditional investigative techniques to confirm a facial recognition result.

In a second report, also released Thursday, the Georgetown researchers described plans in Detroit and Chicago to create real-time facial recognition systems by connecting the software to surveillance cameras positioned across the cities. Such systems are seen by critics as the next step in the government's ability to expand surveillance of the public.