WASHINGTON — Want to manipulate the facial expressions of a world leader in real time? How about creating a digital copy of a politician’s voice that can be made to say whatever you want? Or maybe even producing a completely fraudulent video message that looks and sounds real enough to be shared thousands of times before anyone verifies its authenticity?

There’s a technology for that.

Rapidly advancing tools that let users manipulate audio and video have a growing number of lawmakers, technology experts and campaign consultants sounding the alarm about what the future of “fake news” could soon look like. And it doesn’t take much imagination to see the disruptive impact a fabricated but authentic-looking or authentic-sounding piece of media could have on politics, diplomacy, the economy and beyond.

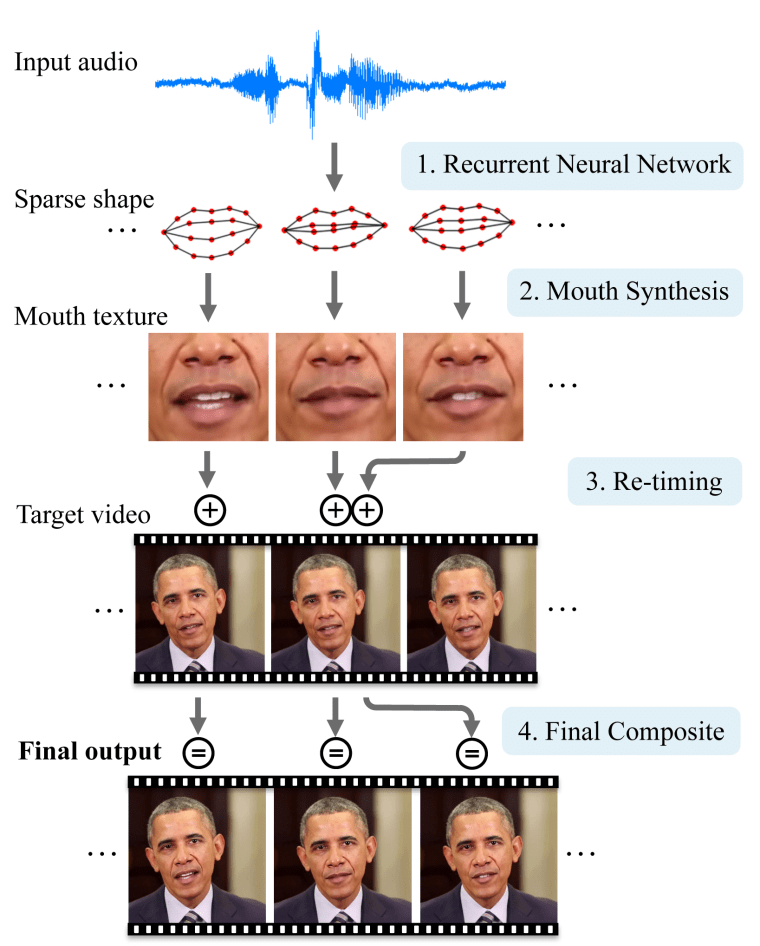

The issue was thrust into the national spotlight last month when BuzzFeed partnered with filmmaker Jordan Peele to create a video of former President Barack Obama appearing to deliver a public service announcement about the potential impact of manipulated media. Only it wasn’t Obama.

Instead, it was Peele’s impersonation of Obama, synced closely enough with the former president’s lip movements to leave viewers open to the possibility that the video was authentic, until the message itself became a clear parody.

The video showcases both the potential and the limits of where the technology stands. A casual observer could be forgiven at first for mistaking the video for a genuine presidential message, especially if watching on a mobile device. A closer look is less impressive, but it may not be long before the signs of tampering become nearly impossible to pick up with the untrained eye.

Hany Farid, a digital forensics expert at Dartmouth College, said there has been a “startling improvement” over the past year in the technology behind “deep fakes,” videos made with artificial intelligence that lets users swap people’s faces with relative ease.

“If there’s two dozen people in the world who can create fakes, that’s a risk,” Farid said. “But it’s very different than if 2,000 people or 20,000 people or 200,000 people can do the same thing because now it’s at the push of a button.”

Anyone with a mobile phone can now produce the kinds of video effects that once required expensive software and extensive training, though convincingly faked video addresses paired with well-matched audio still require a certain level of expertise.

But other threats come from openly available tools such as those offered by Lyrebird, a company that can create “digital copies” of voices that can be made to say anything, or from real-time facial re-enactment techniques like the ones researchers in Germany and at Stanford University have unveiled in recent years.

“This is terrifying,” Shane Greer, co-owner of the publication “Campaigns & Elections,” told hundreds of digital consultants during a presentation on new forms of fake media at a tech conference in Washington last week.

Greer has delivered the talk to political consultants in the U.S. and Europe, and will soon add Mexico, which is dealing with its own fake news problem ahead of its July 1 elections, to the list.

The technology has already been “weaponized” in at least one instance in the U.S., Greer says, when a doctored animation of Parkland shooting survivor Emma Gonzalez tearing up the Constitution quickly swept across conservative social media. In the original image, part of a Teen Vogue story on the youth-led movement to curb gun violence, Gonzalez rips a shooting-range target in half, not the Constitution. The fraudulent image was shared thousands of times before Gab, a popular alternative to Twitter among members of the alt-right, called it “obviously a parody/satire.”

“Consider what was done with the Emma Gonzalez video now versus where that technology will be two, three and four years from now and what both those in the U.S. and international actors will be able to do to disrupt the democratic process,” Greer said.

The issue is on the minds of lawmakers like Sen. Mark Warner, D-Va., vice chair of the Senate Intelligence Committee. He is helping lead one of the congressional investigations into Russian interference in the 2016 presidential election, and has co-sponsored legislation, stalled so far, aimed at forcing websites like Facebook and Google to disclose more information about political ads appearing on their platforms.

"People will demand action being taken if we don't find a way to collaborate [with technology companies] on this," Warner told NBC News. "And the notion that the market is going to fix this or the tech companies themselves will police this, I just don't think that’s going to be the case."

The Virginia Democrat's concerns go well beyond politics. Warner envisions potentially dire scenarios in which a convincing but fake message from the Federal Reserve about interest rates could wreak havoc on world markets, or a manipulated video of a CEO could send a company’s stock into free fall.

“Policy makers and even tech businesses are always going to chase technology,” Warner said. “And in this case, you could really have a catastrophic event happen, and then have a complete overreaction. So I think that’s why I think you need a great sense of urgency.”

Facebook, still reeling from revelations over how it was used to spread misinformation during the 2016 campaign, has so far focused most of its energy on fact-checking links to articles. But the company announced in March that it would begin testing ways to check photos and videos, unveiling a partnership with the French news agency AFP. A Facebook spokesperson says the company hopes to roll out more partnerships in other countries soon.

Facebook CEO Mark Zuckerberg told lawmakers during an appearance on Capitol Hill last month that he wants to work with Congress to develop ways to prevent outside interference in elections and to protect user privacy.

“I think there is a recognition among tech leaders that there is a need for well-crafted regulation and that there will be an erosion of public trust if more breaches take place or if there is propaganda that is spread on their sites,” said Rep. Ro Khanna, a Democrat who represents Silicon Valley.

One part of the government that has already begun taking on the threat is the often secretive Defense Advanced Research Projects Agency, or DARPA. The agency, which gave NBC News a look last month at the technologies it is developing to detect these “deep fakes,” began searching for ways to flag this type of fake media about two years ago, when it first noticed believable-looking manipulations becoming more common.

David Doermann, program manager of DARPA's media forensics project, called MediFor, said the goal is to develop a completely automated system that can detect fake images and video and that can be used by any application.

“We're taking the bull by the horns,” Doermann told NBC News. “We really feel like, from the research community, this is a problem that nobody else would address at the level that we are.”

Doermann has said he hopes to reach a point where every video and image posted to social media sites goes through a verification process. A DARPA spokesperson said the agency has been in conversations with social media platforms and makers of image-editing software. The goal is to have the technology ready within two years, which would fall in the midst of another presidential campaign.

But Farid, who is working with DARPA to develop these technologies, warned that technology alone will not solve the problem. Responsibility lies equally with the websites where fake news spreads and with the consumers who take in the information.

“As we develop techniques to detect these, the adversary will start using those against us. And then they will use machine learning to design content that will circumvent those systems,” Farid said. “There’s no magic solution here.”