Rhesus monkeys in a lab are using their brains to move two arms of a virtual primate on a screen, bringing researchers one step closer to outfitting paralyzed humans with exoskeletons that move like biological limbs.

This double-arm demonstration is a significant first: The two primate test subjects are the only ones to have controlled both limbs through neural signals alone, using electrodes surgically implanted in their brains.

Leading development of the new brain-computer interface system is Miguel Nicolelis, a neuroscientist at Duke University and a controversial figure in his field for his lofty promise that a paralyzed person wearing a neurally controlled prototype exoskeleton will deliver a kick at the 2014 World Cup finals in Brazil. He says the new work is a key development toward building exoskeletons that paralyzed people can wear and manipulate as if they were healthy limbs.

Virtual bodies controlled by the brain may remind you of "Avatar," James Cameron’s 2009 blockbuster set on the moon Pandora. Realistically, this and similar research "is indeed a far cry from the movie," according to Lee Miller, a Northwestern University researcher unconnected with the new study.

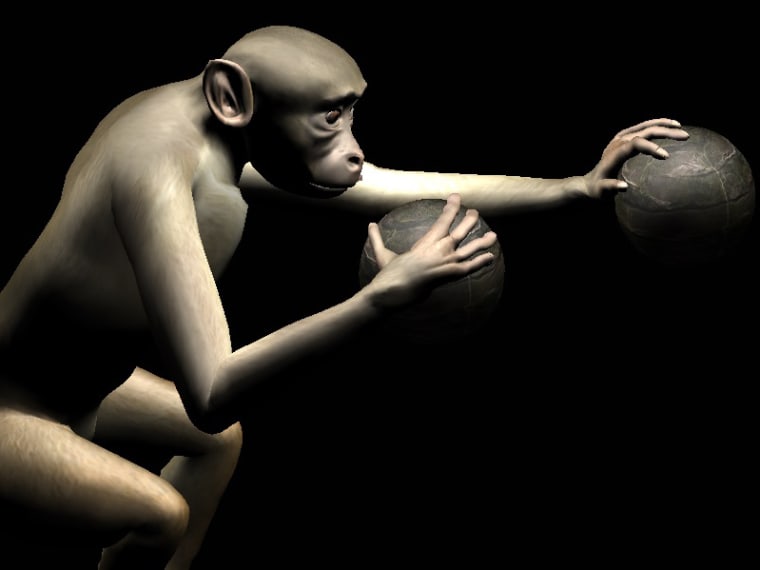

The virtual monkeys don't look very natural, but the real primates seemed to know intuitively how to move the arms on screen, and reach out and "hold" blinking, moving targets.

The monkeys reacted to the avatars "as if it were them in the virtual space," Nicolelis told NBC News. When they held a virtual hand over the target for more than 100 milliseconds, they were rewarded with a sip of fruit juice.

The dual-arm work builds on earlier research by Nicolelis and others. Other brain-computer interface researchers have also observed that their lab monkeys understand simulated avatars. But so far, demonstrations have moved only a single real robotic arm, a single virtual arm, or a cursor.

"Where [the system] really does break new ground" is in enabling control of both arms, Miller told NBC News. “No one else that I know of has made any successful effort to control both arms at the same time,” said Miller, whose own research focuses on connecting brain signals with paralyzed limbs rather than robotic or virtual arms. "That’s unique and important," because to enable complete control in humans you'll want them to be able to move two arms, not just one.

Both primate test subjects seemed to learn the skills intuitively, whether or not they were first trained with a joystick. Do we know what they're thinking? "That's an awfully difficult question to answer because we don’t have Dr. Dolittle handy," Miller says. Still, this indicates that the skill may not be too hard for humans to master.

Both monkeys were able to move both arms in four different directions, Nicolelis and team report in a study published in Wednesday's edition of Science Translational Medicine.

Nicolelis claims that his experiments mark a record number of neurons simultaneously recorded in non-human primates — almost 500 in one monkey. Miller adds that other researchers aren't far behind, measuring about 400 neurons in the primates in their labs.

And similar studies spell hope for future bionic limbs. Targeting a future where prosthetics and exoskeletons will re-mobilize paraplegics, many researchers are training monkeys and people to animate virtual avatars or robotic limbs with neural signals.

Other brain-computer interface researchers at Stanford University and the University of Pittsburgh have demonstrated even better precision of movements in robots and virtual avatars than the two-arm demo, Miller said.

But recent milestones are moving, if not encouraging, for bionic limbs. In 2012, a team led by Pitt neurobiologist Andrew Schwartz demonstrated a system that allowed a 58-year-old woman, paralyzed for 14 years, to control a robotic arm and bring a drink of coffee to herself. In 2011, the same group enabled a person paralyzed by a motorcycle accident to high-five his girlfriend using a mind-controlled robotic arm.

An alternative approach taken by Miller is to re-innervate existing limbs in partially paralyzed patients by connecting the electrodes in the brain to muscle tissue, bypassing an injured spine.

Meanwhile, Nicolelis is characteristically gung-ho about his 2014 World Cup deadline. How's progress? "It’s going — it’s on schedule," he said.

Peter Ifft, Solaiman Shokur, Zheng Li, Mikhail Lebedev, and Miguel Nicolelis are authors of "A Brain-Machine Interface Enables Bimanual Arm Movements in Monkeys" published in Science Translational Medicine.

Nidhi Subbaraman writes about technology and science.