Computer software is increasingly involved in tasks like winnowing down job applicants' résumés or deciding whether a bank should grant a home loan. But what if a seemingly neutral algorithm has human bias unwittingly baked in?

Researchers have developed a new test that measures whether these decision-making programs can be as biased as humans, along with a method for fixing them.

A team of computer scientists from the University of Utah, the University of Arizona and Haverford College presented research last week on a technique that can determine whether these software programs discriminate unintentionally. The group was also able to determine whether a program violated the legal standards for fair access to employment, housing and other areas.


The test itself uses a machine-learning algorithm, similar to the decision-making programs it examines. If the test can accurately predict an applicant's race or gender from the data given to those programs, even though race and gender have been explicitly hidden, there is a potential for bias: the remaining data still carries that information, and a decision-making program could end up relying on it.
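The researchers' code is not described in this article, so the following is only a minimal sketch of the idea under stated assumptions: one numeric feature, a hidden attribute coded 0/1, a simple threshold classifier standing in for the machine-learning algorithm, and the balanced error rate (the average of the error rates on each group) as the measure of how well the hidden attribute can be predicted. The function names `bias_check` and `fit_threshold` and the `max_ber=0.3` cutoff are hypothetical. A balanced error rate near 0.5 means the classifier does no better than chance; a rate near 0 means the feature leaks the hidden attribute.

```python
from typing import Callable, Sequence


def balanced_error_rate(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Average of the error rates on class 0 and class 1."""
    errors = []
    for cls in (0, 1):
        preds = [p for t, p in zip(y_true, y_pred) if t == cls]
        if not preds:
            raise ValueError(f"no examples of class {cls}")
        errors.append(sum(p != cls for p in preds) / len(preds))
    return sum(errors) / 2


def fit_threshold(xs: Sequence[float], groups: Sequence[int]) -> Callable[[float], int]:
    """Pick the cut point and direction that best separate the groups on this data."""
    best = None  # (ber, threshold, above_is_1)
    for t in sorted(set(xs)):
        for above_is_1 in (True, False):
            preds = [int((x >= t) == above_is_1) for x in xs]
            ber = balanced_error_rate(groups, preds)
            if best is None or ber < best[0]:
                best = (ber, t, above_is_1)
    _, best_t, best_dir = best
    return lambda x: int((x >= best_t) == best_dir)


def bias_check(xs: Sequence[float], groups: Sequence[int], max_ber: float = 0.3):
    """Train on even-indexed rows, test on odd-indexed rows.

    Returns (ber, flagged); flagged is True when the hidden group can be
    predicted from the feature noticeably better than chance.
    """
    train = range(0, len(xs), 2)
    test = range(1, len(xs), 2)
    clf = fit_threshold([xs[i] for i in train], [groups[i] for i in train])
    ber = balanced_error_rate([groups[i] for i in test], [clf(xs[i]) for i in test])
    return ber, ber < max_ber


# A feature that cleanly separates the groups is flagged...
print(bias_check([1, 2, 3, 4, 5, 6, 7, 8], [0, 0, 0, 0, 1, 1, 1, 1]))  # (0.0, True)
# ...while one with identical values in both groups is not.
print(bias_check([1, 2, 3, 4, 1, 2, 3, 4], [0, 0, 0, 0, 1, 1, 1, 1]))  # (0.5, False)
```

Real screening data has many features, and a stronger classifier would be used in practice; the threshold model is chosen here only to keep the example short and dependency-free.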

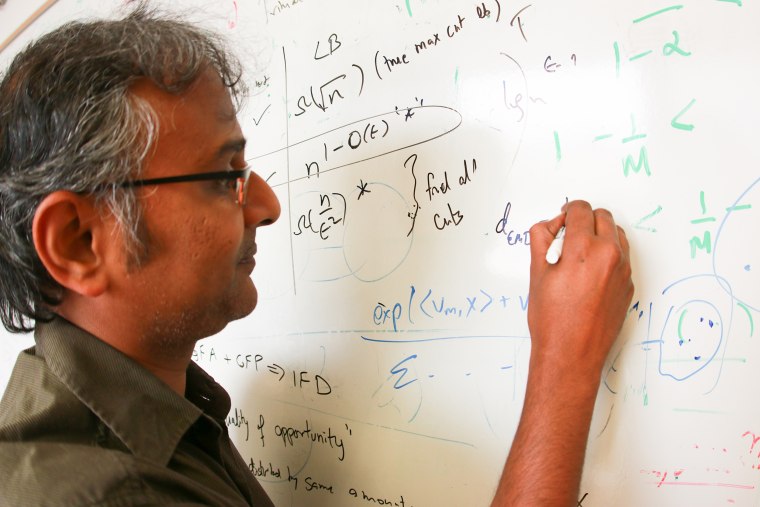

"There’s a growing industry around doing résumé filtering and résumé scanning to look for job applicants ... If there are structural aspects of the testing process that would discriminate against one community ... that is unfair," Suresh Venkatasubramanian, a University of Utah associate professor who led the research, said in a press release.

If the test does reveal a potential problem with an algorithm, Venkatasubramanian said, it's easy to redistribute the data being analyzed, which prevents the algorithm from seeing the information that can create the bias.
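The article does not say exactly how the data is redistributed. One way to do it, assumed here for illustration, is a rank-preserving repair of each feature: every value is replaced by the median, across groups, of the value found at the same within-group quantile. After this repair each group's values follow the same distribution, so the feature no longer reveals group membership, while the ordering of people within each group is kept. The function name `repair_feature` is hypothetical.

```python
from statistics import median
from typing import Hashable, List, Sequence


def repair_feature(values: Sequence[float], groups: Sequence[Hashable]) -> List[float]:
    """Rank-preserving repair of one numeric feature (illustrative sketch).

    Each value is mapped to the median, across all groups, of the value at the
    same within-group quantile, so every group ends up with the same values.
    """
    # Collect and sort each group's values.
    by_group = {}
    for v, g in zip(values, groups):
        by_group.setdefault(g, []).append(v)
    sorted_groups = {g: sorted(vs) for g, vs in by_group.items()}

    repaired = []
    for v, g in zip(values, groups):
        own = sorted_groups[g]
        rank = own.index(v)  # position within its own group; ties share the first slot
        q = rank / (len(own) - 1) if len(own) > 1 else 0.0
        # Value at quantile q in every group, then take the median of those.
        at_q = [vs[round(q * (len(vs) - 1))] for vs in sorted_groups.values()]
        repaired.append(median(at_q))
    return repaired


print(repair_feature([1, 2, 3, 4, 5, 6, 7, 8], [0, 0, 0, 0, 1, 1, 1, 1]))
# [3.0, 4.0, 5.0, 6.0, 3.0, 4.0, 5.0, 6.0]
```

In the example, the lowest-ranked person in each group receives the same repaired value, and so on up the ranks, so a classifier can no longer tell the groups apart from this feature. The trade-off is that some information in the original values is lost.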


"The irony is that the more we design artificial intelligence technology that successfully mimics humans, the more that A.I. is learning in a way that we do, with all of our biases and limitations," Venkatasubramanian said.