Facebook said Wednesday that it is changing the way it recommends groups and will limit the reach of those that break its rules, a move that comes amid scrutiny of the platform's propensity to push some of its users to extremism.

The new policies are part of a continued effort to clean up misinformation and harmful content, the company said in a blog post.

"We know we have a greater responsibility when we are amplifying or recommending content," Tom Alison, Facebook's vice president of engineering, said in the post. "As behaviors evolve on our platform, though, we recognize we need to do more."

Under the new rules, which will apply to its tens of millions of active groups, Facebook will show rule-breaking groups lower in the recommendations bar, making them less discoverable to other users. The more rules a group breaks, the more restrictions Facebook will impose on it, until the group is removed completely.

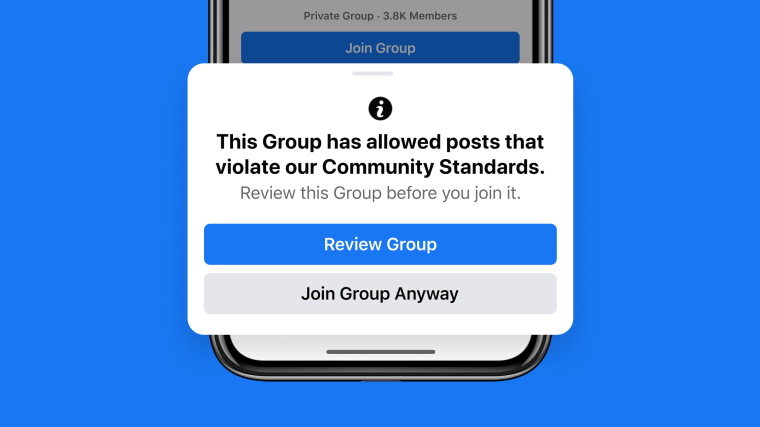

Facebook also plans to show would-be members of rule-violating groups a pop-up warning that the group "allowed posts that violate our Community Standards" and suggesting they review the group before joining. For existing members, it will reduce the reach of rule-breaking groups by giving their content lower priority in users' News Feeds.

It will also require the admins and moderators who run rule-breaking groups to police their communities more strictly: when members continue to violate policies, admins and moderators will have to approve every post before it is published. This requirement also extends to new groups made up of members of groups that previously broke the rules. In effect, any new group formed to evade a crackdown on a group that repeatedly spread misinformation would be subject to restrictions immediately.

If an admin or moderator repeatedly approves violating content, the entire group will be removed.

A group member who repeatedly violates rules in groups will be blocked from posting or commenting in any group and barred from creating any new groups.

The move is the most recent and most far-reaching expansion of the social media giant's efforts to mitigate harmful content in groups on its platform. In January, following the Capitol riot, CEO Mark Zuckerberg announced that Facebook would no longer recommend "civic" and "political" groups. In 2020, the company said it would stop recommending "health" groups, and it banned militia and QAnon groups entirely.

For years, Facebook has faced criticism that its secret recommendation algorithm pushed users into mostly private echo chambers where racism, conspiracy theories and misinformation flourished. A 2016 internal company report cited by The Wall Street Journal found that a majority of users who joined extremist groups did so because Facebook had recommended those groups to them.

In 2020, Facebook groups continued to be used to spread misinformation and organize real-world violence. Groups have been hubs of Covid-19 misinformation and were used by some Capitol rioters to plan their attack.

Zuckerberg announced in 2017 that groups would be the platform's primary feature and set a goal of 1 billion people in "meaningful groups." By the summer of 2020, the company was about halfway to its goal.

As the feature continues to grow, Facebook suggested that a scalpel, not an ax, is the best way to combat the problems that arise.

"We don't believe in taking an all-or-nothing approach to reduce bad behavior on our platform," Alison said in the post. "Instead, we believe that groups and members that break our rules should have their privileges and reach reduced, and we make these consequences more severe if their behavior continues - until we remove them completely. We also remove groups and people without these steps in between when necessary in cases of severe harm."

He said the changes would go into effect globally over the next few months.