How smart is your favorite search engine? If the game show "Jeopardy" is a guide, it's just about as smart as the average human.

Computer scientist Stephen Wolfram, the brains behind WolframAlpha, tested how often the correct answers to "Jeopardy" questions appear in the title or text snippets of the results page on Google, Bing, and a handful of other search engines. He didn't include WolframAlpha, his own search engine, because it uses a different type of technology.

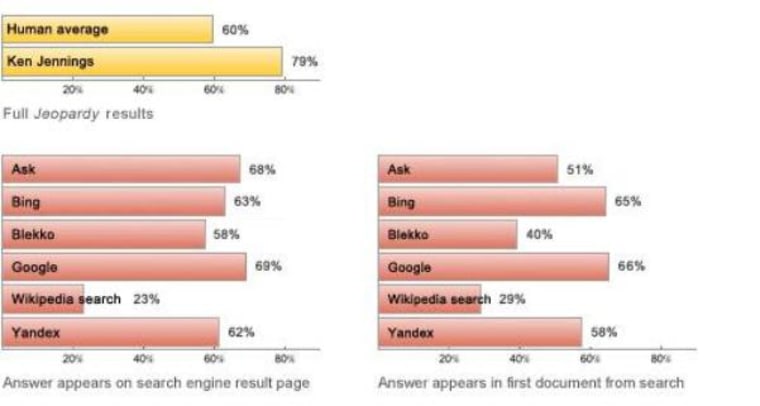

Google displayed the right answer on its results page 69 percent of the time. Ask.com's page had the correct answer 68 percent of the time. Bing registered a 63 percent success rate, and Yandex came in at 62 percent. Blekko (58 percent) and Wikipedia search (23 percent) performed worse than the average human, who gets 60 percent of "Jeopardy" questions correct.

Of course, Ken Jennings, the all-time winning champ of the game show in which players buzz in to provide questions that go with answers displayed on a screen, gets 79 percent correct. That means basic search engines have a way to go to beat the best in the game.

That's where the IBM Watson supercomputer comes in. Next month, "Jeopardy" will air a series of shows in which the question-answering machine goes head-to-head against Jennings and Brad Rutter, another champ, for a $1 million prize. We already know that Watson bested the two "Jeopardy" whizzes in a test run this month, and the tournament shows have already been taped. Any bets on who won?

The buzz over the human-vs.-machine match inspired Wolfram to conduct the search engine test as part of a thought exercise comparing his WolframAlpha technology, which is built on a different paradigm, to Watson.

He says IBM's machine is great at answering questions drawn from unstructured data. That ability has potential real-world applications such as mining medical documents or patents and conducting discovery in litigation, he notes in a blog post about his test.

WolframAlpha technology, on the other hand, can be used to "investigate structured data in completely free-form unstructured ways," he writes. He goes on to explain:

"One asks a question in natural language, and a custom version of WolframAlpha built from particular corporate data can use its computational knowledge and algorithms to compute an answer based on the data — and in fact generate a whole report about the answer."

So where does Wolfram stand on the human-vs.-machine battle? The last line of his blog post provides a pretty big hint about where his sympathies lie: "Good luck on 'Jeopardy'! I'll be rooting for you, Watson."


John Roach is a contributing writer for msnbc.com.