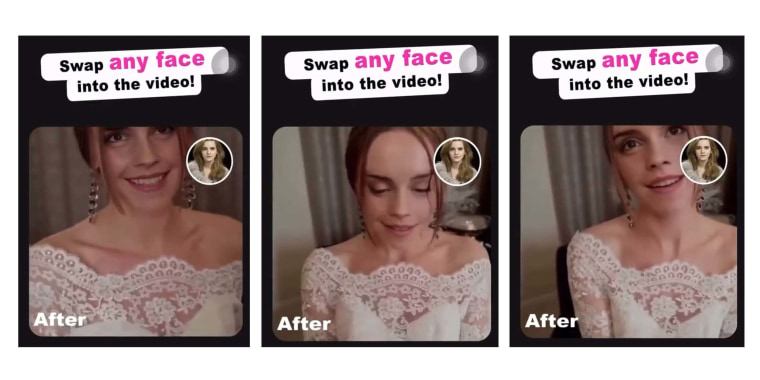

In a Facebook ad, a woman with a face identical to actor Emma Watson’s smiles coyly and bends down in front of the camera, appearing to initiate a sexual act. But the woman isn’t Watson, the “Harry Potter” star. The ad was part of a massive campaign this week for a deepfake app that allows users to swap any face into any video of their choosing.

Deepfakes are content in which faces or sounds are switched out or manipulated. Commonly, deepfake creators make videos in which celebrities appear to be willing participants, even though they are not. Increasingly, the technology has been used to make nonconsensual pornography featuring the faces of celebrities, influencers or any person, including children.

The ad campaign on Meta nods to the fact that this once-advanced technology has rapidly spread to readily available consumer applications being advertised on mainstream parts of the internet. Despite many platforms prohibiting manipulative and malicious deepfake content, apps like the ones reviewed by NBC News have been able to slip through the cracks.

On Sunday and Monday, an app for creating “DeepFake FaceSwap” videos rolled out more than 230 ads on Meta’s services, including Facebook, Instagram and Messenger, according to a review of Meta’s ad library. Some of the ads showed what looked like the beginning of pornographic videos with the well-known sound of the porn platform Pornhub’s intro track playing. Seconds in, the women’s faces were swapped with those of famous actors.

When Lauren Barton, a journalism student in Tennessee, saw the same ad on a separate application, she was shocked enough to screen-record it and post it on Twitter, where it received over 10 million views, according to Twitter’s views counter.

“This could be used with high schoolers in public schools who are bullied,” Barton said. “It could ruin somebody’s life. They could get in trouble at their job. And this is extremely easy to do and free. All I had to do was upload a picture of my face and I had access to 50 free templates.”

Of the Meta ads, 127 featured Watson’s likeness. Another 74 featured the actor Scarlett Johansson’s face swapped with those of women in similarly provocative videos. Neither actor responded to a request for comment.

“Replace face with anyone,” the captions on 80 of the ads read. “Enjoy yourself with AI swap face technology.”

On Tuesday, after NBC News asked Meta for comment, all of the app’s ads were removed from Meta’s services.

While no sexual acts were shown in the videos, their suggestive nature illustrates how the application can potentially be used to generate faked sexual content. The app allows users to upload videos to manipulate and also includes dozens of video templates, many of which appear to be taken from TikTok and similar social media platforms.

The preset categories include “Fashion,” “Bride,” “For Men,” “For Women,” and “TikTok,” while the category with the most options is called “Hot.” It features videos of scantily clad women and men dancing and posing. After selecting a video template or uploading their own, users can input a single photo of anyone’s face, and receive a face-swapped version of the video in seconds.

The terms of service for the app, which costs $8 per week, say it does not allow users to impersonate others via its services or upload sexually explicit content. The app developer listed on the App Store is called Ufoto Limited, owned by a Chinese parent company, Wondershare. Neither company responded to a request for comment.

Meta banned most deepfake content in 2020, and the company prohibits adult content in ads, including nudity, depictions of people in explicit or suggestive positions, or activities that are sexually provocative.

“Our policies prohibit adult content regardless of whether it is generated by AI or not, and we have restricted this Page from advertising on our platform,” a Meta spokesperson said in a statement.

The same ads were also spotted on free photo-editing and gaming apps downloaded from Apple’s App Store, where the app first appeared in 2022 for free for ages 9 and up.

An Apple representative said that the company does not have specific rules about deepfakes but that it prohibits apps that include pornography and defamatory content. Apple said it removed the app from the App Store after having been contacted by NBC News.

The app was also previously available to download for free on Google Play, where it was rated "Teen" for "suggestive themes." Google said Wednesday it removed the app from Google Play after having been contacted by NBC News.

Apple and Google have taken action against similar AI face-swap apps, including a different app that was the subject of a Reuters investigation in December 2021. Reuters found that the app was advertising the creation of “deepfake porn” on pornographic websites. At the time, Apple said it didn’t have specific guidelines around deepfake apps but prohibited content that was defamatory, discriminatory or likely to intimidate, humiliate or harm anyone. While its ratings and ad campaigns have been adjusted, the app that Reuters reported on is still available to download free on Apple’s App Store and Google Play.

The app NBC News reviewed is one of the latest in a boom of freely accessible consumer deepfake products.

A search for “deepfake” on app stores turns up dozens of apps with similar technological capabilities, including ones that promote making “hot” content.

Mainstream examples of the technology show celebrities and politicians doing and saying things they’ve never actually said or done. Sometimes the effects are comical.

However, deepfake technology has overwhelmingly been used to make pornography with nonconsenting stars. As the technology has improved and become more widespread, the market for nonconsensual sexual imagery has ballooned. Some websites allow users to sell nonconsensual deepfake porn from behind a paywall.

A 2019 report from DeepTrace, an Amsterdam-based company monitoring synthetic media online, found that 96% of deepfake material online is pornographic.

In January, female Twitch streamers spoke out after a popular male streamer apologized for consuming deepfake porn of his peers.

Livestreaming research conducted by the independent analyst Genevieve Oh found that the top website for consuming deepfake porn exploded in traffic following the Twitch streamer’s apology. Oh’s research also found that the number of deepfake pornographic videos has nearly doubled every year since 2018. More deepfake porn videos were uploaded in February than in any previous month, Oh said.

While the nonconsensual sharing of sexually explicit photos and videos is illegal in most states, laws addressing deepfake media are in effect only in California, Georgia, New York and Virginia.